on server migration

Recently I migrated my Virtual Private Server to a green hosting provider. My server runs all the web services on *.simonxix.com including my website, an onion service of my website, several web app experiments, my Joplin Server where I keep all my notes, an old WordPress site, an old Sandstorm platform, a couple of other open source applications, and the Ghost platform for this newsletter. I’d been putting off migrating this server for a while but finally doing it gave me a good opportunity to rethink what I want from a server and my deployment structure. None of this is revolutionary but hopefully it’s an interesting insight into considerations when putting together a server for open source software deployment.

why migrate?

My old server was hosted by VPS.net and was registered in 2015 as a server for the now-inactive Radical Librarians Collective as a space where we could experiment with autonomous tech and open source infrastructure. We installed Sandstorm on the server which served us well as a quick and dirty space for document management, collaborative writing, and an old-school forum for intra-collective communication. I continued to maintain the server and the Sandstorm platform while gradually building my own infrastructure haphazardly on top of it. Over time, I layered on websites served through Apache, replaced Apache with Nginx, and eventually switched to a Docker-based deployment structure. The technical cruft from this haphazard deployment and regular odd experiments as well as the server’s meagre 1 GB of RAM meant the server was straining a bit.

I was also very aware of the carbon footprint of cloud servers [1] and was keen to move to a green and ethical hosting provider. I’d looked at various green hosting providers but never found a compelling enough offer to commit to migrating.

Fortunately my Copim colleague Toby Steiner brought Infomaniak’s cloud hosting to my attention. Infomaniak is a Swiss hosting company who I knew for kMeet, an open source video conferencing platform mentioned in a previous post, and kSuite, an open source productivity suite that replaces the Microsoft SharePoint / Teams / Office infrastructure for collaborative working and document management.

But I wasn’t aware of Infomaniak’s green VPS hosting. All their data centres use 100% renewable energy and low-voltage technologies [2] and offer more server resources for less money than other green providers I’d investigated. My new server has 4 CPUs, 4 GB RAM, and 80 GB of hard disk space for €9.00 per month compared to the 4 CPUs, 1 GB RAM, and 51 GB of hard disk space that I was getting from my old provider for £9.00 per month.

deployment structure

The reduction in carbon emissions was the main driver for migrating but it also presented a good opportunity for rationalising the mess that my old server had become and rethinking my deployment structure from the ground-up. After checking off the basics on my new server (setting up a sudo user, setting up SSH keys, setting up a firewall [3], setting up SFTP, setting up Git and an SSH key on GitHub), I was ready to think about the structure.

I use a containerised approach based on the model that I inherited when I took over maintenance of the COPIM project infrastructure. A container is similar to a virtual machine but much lighter: it shares the host’s kernel and contains only the application and the dependencies needed to run that application. Each container is like an extremely lightweight computer designed to run one piece of software and only that one piece of software. It means you’re not installing sometimes conflicting dependencies on your host server and it isolates applications from each other so they’re not interfering with one another’s operation. My model, based on the COPIM model, is a Docker container for each service, with each container’s web traffic routed through an Nginx reverse proxy running in another Docker container.
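To make the reverse proxy part of that model concrete, here is a minimal sketch of a shell function that prints an Nginx server block for one service. It is not my actual configuration: the function name, the hostname, the container name, and the port are all placeholders, and it assumes the proxy container and the service container share a Docker network so that the container name resolves. TLS is left out to keep it short.

```shell
#!/bin/sh
# Hypothetical helper: print an Nginx server block that proxies one
# hostname to one container. All three arguments are illustrative:
#   $1 public hostname, $2 container name, $3 port the container listens on.
render_proxy_conf() {
    domain="$1"; container="$2"; port="$3"
    cat <<EOF
server {
    listen 80;
    server_name ${domain};

    location / {
        # Assumes both containers sit on the same Docker network, so the
        # container name resolves through Docker's embedded DNS.
        proxy_pass http://${container}:${port};
        proxy_set_header Host \$host;
        proxy_set_header X-Forwarded-For \$proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto \$scheme;
    }
}
EOF
}
```

Something like `render_proxy_conf notes.example.com joplin 22300 > joplin.conf` would then write a config file that the Nginx container could load from its configuration directory.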

Unfortunately I had been running all my Docker containers in the same Docker Compose stack. Docker Compose allows you to define docker-compose.yml files as blueprints for Docker containers and how they are networked together and I was running all my services from a single file. This made it easier to take the whole stack up or down but lost out on the ability to modularly take a single service down without impacting on the other services. I was also using the same MySQL and PostgreSQL database containers for multiple applications [4] which is not Docker best practice: it’s better to use a different database container for each different database so that you can take down an application and its database in complete isolation from other services if you need to.
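As a rough illustration of the one-stack-per-service idea, the sketch below scaffolds a service directory with its own docker-compose.yml in which the application gets a dedicated database container. Everything in it is an assumption made for the example rather than a copy of my files: the function name, the `app` / `db` service names, the `internal` and `proxy` network names, and the PostgreSQL image tag. It also assumes a shared network called `proxy` has already been created for the reverse proxy (for example with `docker network create proxy`).

```shell
#!/bin/sh
# Hypothetical helper: create <root>/<name>/ with an empty .env and a
# starter docker-compose.yml. Service, network, and image names are
# placeholders, not taken from a real deployment.
scaffold_stack() {
    root="$1"; name="$2"; image="$3"
    dir="$root/$name"
    if [ -e "$dir/docker-compose.yml" ]; then
        echo "refusing to overwrite $dir/docker-compose.yml" >&2
        return 1
    fi
    mkdir -p "$dir" && touch "$dir/.env" || return 1
    cat > "$dir/docker-compose.yml" <<EOF
services:
  app:
    image: ${image}
    restart: unless-stopped
    env_file: .env
    depends_on:
      - db
    networks:
      - internal
      - proxy
  db:
    # One database container per application; credentials such as
    # POSTGRES_PASSWORD are expected in .env.
    image: postgres:16
    restart: unless-stopped
    env_file: .env
    volumes:
      - db_data:/var/lib/postgresql/data
    networks:
      - internal

volumes:
  db_data:

networks:
  internal:
  proxy:
    # Assumed to exist already and to be shared with the reverse proxy.
    external: true
EOF
}
```

Calling `scaffold_stack ~/stacks joplin joplin/server:latest` would produce a stack that can be brought up or down without touching any other service or its database.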

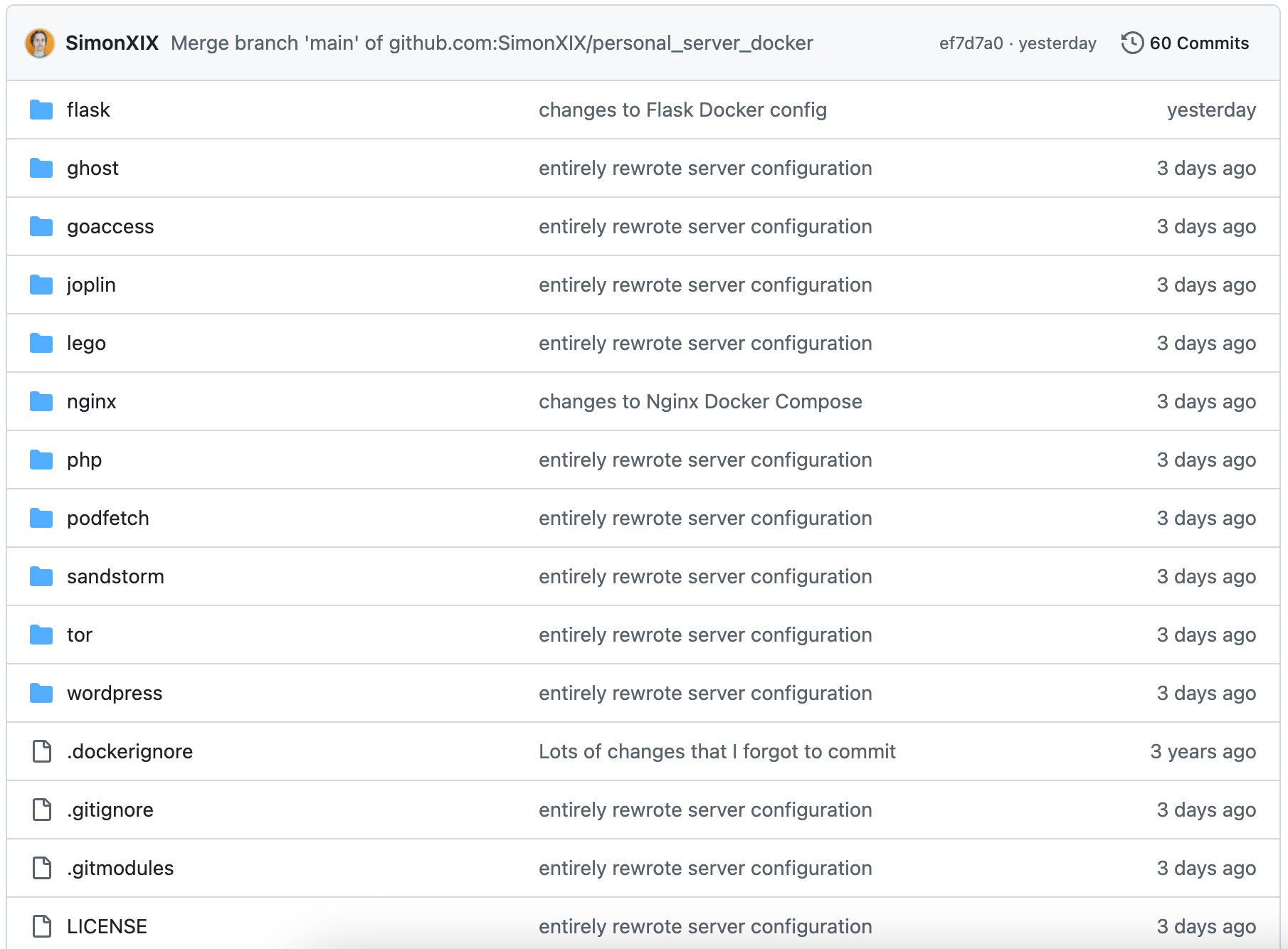

Hence my major deployment change was separating all my services into separate Docker Compose stacks. I set up a directory structure where each service had its own subdirectory, wrote separate docker-compose.yml files for each one, and carefully moved across all the configuration files and .env environment variables files. This was easy for small web applications like my main website for which I could simply clone the GitHub repository: it was more involved for services like Ghost and Joplin which required exporting contents between sites. The worst was the old RLC Sandstorm which has no built-in export feature and required me to tarball the old /opt/sandstorm/var directory, FTP it over to the new server, unpack it, and then spend hours sorting out the extremely finicky file permissions on that directory that Sandstorm requires in order to run [5].
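The tarball step can be sketched with two small shell functions. This is a generic pattern, not the exact commands I ran: the function names are made up, the transfer between servers is left out, and it does not touch the Sandstorm-specific permission fixes. One thing worth knowing is that `tar` only restores file ownership when extracting as root, which matters for a directory as picky as Sandstorm’s.

```shell
#!/bin/sh
# Hypothetical helpers for moving a data directory between servers.

# Pack a directory into a gzipped tarball. File modes and owners are
# recorded in the archive by default.
pack_dir() {
    src="$1"; archive="$2"
    tar -czf "$archive" -C "$(dirname "$src")" "$(basename "$src")"
}

# Unpack an archive under a destination directory. -p restores the
# recorded permissions instead of applying the current umask; ownership
# is only restored when this runs as root.
unpack_dir() {
    archive="$1"; dest="$2"
    mkdir -p "$dest" && tar -xzpf "$archive" -C "$dest"
}
```

On the old server this would be something like `pack_dir /opt/sandstorm/var sandstorm-var.tar.gz`, followed by copying the archive across and running `unpack_dir sandstorm-var.tar.gz /opt/sandstorm` as root on the new one.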

Now each service is isolated in a way that should make it easier to, for example, take down WordPress and its database without taking down all my other services. Hopefully this also makes it easier for anyone who wants to copy my Docker Compose files and Nginx configurations for their own deployments but doesn’t want my full idiosyncratic infrastructure. There’s almost certainly a better way to do this using Portainer or Docker Compose’s extends attribute but I also wrote a quick Bash script to easily manage bringing all the Docker Compose stacks up or down should I need to do so.
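A script along those lines might look like the sketch below. It is a reconstruction of the idea rather than my actual script: the `STACKS_ROOT` variable, the `~/stacks` default, the `DRY_RUN` switch, and the function names are all assumptions, as is the use of the `docker compose` plugin rather than the older `docker-compose` binary. It simply loops over every subdirectory that contains a docker-compose.yml.

```shell
#!/bin/sh
# Hypothetical helper: bring every Docker Compose stack under one root
# directory up or down. Assumes one subdirectory per service.
STACKS_ROOT="${STACKS_ROOT:-$HOME/stacks}"

# Print each immediate subdirectory of $1 that holds a docker-compose.yml.
list_stacks() {
    for dir in "$1"/*/; do
        [ -f "${dir}docker-compose.yml" ] && printf '%s\n' "${dir%/}"
    done
    return 0
}

# Run "docker compose up -d" or "docker compose down" in every stack.
# With DRY_RUN=1 the commands are printed instead of executed.
manage_stacks() {
    case "$1" in
        up)   set -- up -d ;;
        down) set -- down ;;
        *)    echo "usage: $0 up|down" >&2; return 1 ;;
    esac
    list_stacks "$STACKS_ROOT" | while IFS= read -r stack; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "cd $stack && docker compose $*"
        else
            # </dev/null stops docker from consuming the list of stacks.
            (cd "$stack" && docker compose "$@" </dev/null)
        fi
    done
}

# Only act when the script is called with an argument, e.g. "stacks.sh down".
if [ "$#" -gt 0 ]; then manage_stacks "$@"; fi
```

Running it with `DRY_RUN=1` first is a cheap way to check which stacks it would touch before taking anything down for real.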

I thought this server migration would be a pain but the opportunity to rethink my deployment turned out to be really valuable. It’s good to have a more ethical Virtual Private Server that costs me less each month but it’s also good to have a tidy server that doesn’t feel as ramshackle as my old one used to feel. When I find the time, I’ll definitely be bringing this revised structure to some of the other servers that I manage at work.

[1] According to calculations by GoClimate, a cloud server using non-green electricity emits approximately 487 kg CO₂e per year.

[2] The PUE (power usage effectiveness) of their latest data centre is less than 1.1 which makes it the greenest data centre in Switzerland.

[3] There is already an Infomaniak firewall that I can configure through their management panel but it couldn’t hurt to have another.

[4] Specifically, a single MySQL container contained the databases for both WordPress and Ghost while a single PostgreSQL container contained the databases for both Joplin and Podfetch.

[5] Plus spending ages trying to figure out what permissions could possibly be missing before discovering that Ubuntu 24.04 handles AppArmor kernel security slightly differently than earlier versions and that this was the issue: https://groups.google.com/g/sandstorm-dev/c/4JFhr7B7QZU